Responsible AI in nanorobotics means designing systems that prioritize safety, transparency, and ethical standards from the start. Focus on embedding fail-safes, privacy protections, and clear decision-making processes to prevent harm. Regular testing, oversight, and updates are essential to keep risks under control. Following these principles helps ensure that nanorobots benefit society ethically. Read on to see how these safety measures can be incorporated effectively.

Key Takeaways

- Integrate ethical principles and societal values into nanorobot design to prevent harm and promote transparency.

- Implement comprehensive safety testing, continuous monitoring, and fail-safe mechanisms to ensure reliable operation.

- Enforce strict regulatory oversight and regular review to maintain compliance and adapt to technological advancements.

- Promote open communication, stakeholder education, and interdisciplinary collaboration for informed decision-making.

- Proactively anticipate challenges, embed moral considerations, and ensure nanorobots serve humanity ethically and responsibly.

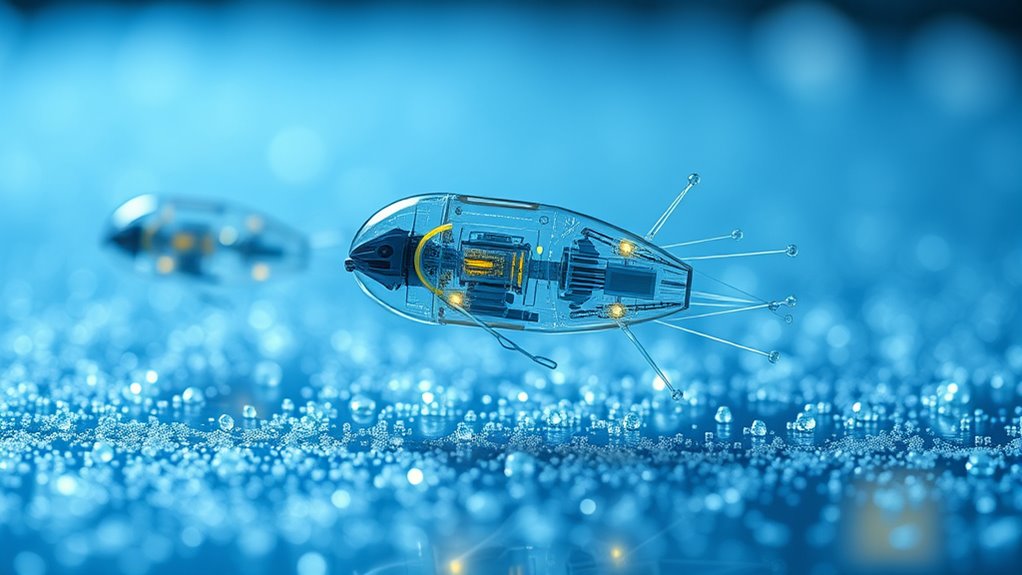

How can we ensure that AI-driven nanorobots operate safely and ethically as they become more integrated into medicine and industry? The answer lies in prioritizing ethical design and implementing rigorous safety protocols. As nanorobots become more capable and autonomous, the responsibility falls on developers, regulators, and users to ensure they operate within strict moral and safety boundaries.

Ethical design means embedding principles that prevent harm, promote transparency, and respect human rights from the very start of development. You need to consider not just what nanorobots can do but also what they should do, so that their purpose aligns with societal values and legal standards. In practice, this means building fail-safes, privacy safeguards, and clear decision-making processes into the design.

Safety protocols are the backbone of responsible nanorobotics: they dictate how these tiny machines are tested, deployed, and monitored. Develop comprehensive testing procedures that simulate real-world conditions so that potential failure points are identified before the nanorobots reach actual users. Once the nanorobots are in operation, continuous monitoring is essential to detect anomalies or malfunctions early and prevent unintended consequences. Robust protocols also set limits on what nanorobots can interact with or modify, so that they cannot cause irreversible damage or interference.

Regulatory frameworks should enforce strict compliance, with oversight bodies regularly reviewing protocols and data to maintain safety standards. Transparency is crucial here: open communication about how nanorobots are designed, tested, and used lets stakeholders assess risks and benefits accurately. Educating users and operators about safe handling procedures further reduces the potential for misuse or accidental harm.
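
To make "fail-safes" and "limits on what nanorobots can interact with" more concrete, here is a minimal Python sketch. It assumes a hypothetical controller that receives commands (a target, a payload dose, an expected temperature) and checks each one against a fixed operating envelope. The class names, fields, and limit values are all illustrative placeholders, not clinical values or any real nanorobot API. On any violation, the controller refuses the command and enters a safe mode that only a human operator can clear.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SafetyEnvelope:
    """Hypothetical operating limits; placeholder numbers, not clinical values."""
    max_temperature_c: float = 42.0
    max_dose_units: float = 5.0
    allowed_targets: frozenset = frozenset({"tumor_cell"})  # what the robot may modify


@dataclass
class Command:
    target: str
    dose_units: float
    expected_temperature_c: float


@dataclass
class Controller:
    envelope: SafetyEnvelope
    safe_mode: bool = False
    log: list = field(default_factory=list)  # audit trail of every decision

    def execute(self, cmd: Command) -> bool:
        """Run cmd only if it stays inside the envelope; otherwise fail safe."""
        if self.safe_mode:
            # Once tripped, refuse everything until an operator resets the controller.
            self.log.append(("rejected", cmd.target, "safe mode"))
            return False
        violations = []
        if cmd.target not in self.envelope.allowed_targets:
            violations.append("target not allowed")
        if cmd.dose_units > self.envelope.max_dose_units:
            violations.append("dose above limit")
        if cmd.expected_temperature_c > self.envelope.max_temperature_c:
            violations.append("temperature above limit")
        if violations:
            self.safe_mode = True
            self.log.append(("rejected", cmd.target, "; ".join(violations)))
            return False
        # A real system would actuate here; this sketch only records the decision.
        self.log.append(("executed", cmd.target, "ok"))
        return True

    def operator_reset(self) -> None:
        """Human-in-the-loop step: only an operator can leave safe mode."""
        self.safe_mode = False
        self.log.append(("reset", "-", "operator"))


ctrl = Controller(SafetyEnvelope())
print(ctrl.execute(Command("tumor_cell", 2.0, 38.0)))    # True
print(ctrl.execute(Command("healthy_cell", 1.0, 37.0)))  # False: trips safe mode
print(ctrl.execute(Command("tumor_cell", 1.0, 37.0)))    # False until operator_reset()
```

The design choice here is to fail closed: after a single violation the controller stops acting altogether rather than skipping only the bad command, and the log records why each command was accepted or refused so that reviewers can audit it later.
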

Ethical design also extends to addressing bias and ensuring equitable access, so that the benefits of nanorobotics don't disproportionately favor certain groups. As the technology evolves, safety protocols must adapt to keep pace with new capabilities and emerging risks. Interdisciplinary collaboration among ethicists, engineers, medical professionals, and policymakers helps evaluate and improve safety measures continuously. Considering a diverse range of design options from the outset can also help prevent unintended biases or limitations in nanorobot capabilities.

Ultimately, responsible AI in nanorobotics demands a proactive approach: anticipating challenges before they occur and embedding safety and ethics into every stage of development. Only through deliberate, well-informed effort can you ensure that these powerful tools serve humanity safely, ethically, and effectively, paving the way for a future in which nanorobotics enhances lives without compromising moral integrity.

Frequently Asked Questions

How Does Responsible AI Address Unintended Nanorobot Behaviors?

You can address unintended nanorobot behaviors by implementing robust behavior-monitoring systems that detect anomalies early. Ethical frameworks then guide decision-making so that responses align with safety and moral standards. Continuously observing nanorobot activity and applying these frameworks helps you catch harmful outcomes before they escalate and respond promptly. This proactive approach maintains control, fosters trust in nanorobotics applications, and minimizes the risks associated with unexpected behavior.
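
One simple way to detect anomalies early is a rolling z-score test on a telemetry stream. The sketch below is a generic statistical technique, not a method described in this article; it assumes a single numeric reading per time step (for example, a hypothetical speed measurement), and the window size, threshold, and readings are made-up illustration values.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flags telemetry readings far from the recent rolling mean (z-score test)."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # oldest readings drop off automatically
        self.threshold = threshold           # standard deviations that count as anomalous
        self.min_history = min_history       # don't judge until a baseline exists

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the rolling window."""
        anomalous = False
        if len(self.history) >= self.min_history:
            data = list(self.history)
            mean = statistics.fmean(data)
            stdev = statistics.pstdev(data)
            if stdev == 0:
                anomalous = value != mean
            else:
                anomalous = abs(value - mean) / stdev > self.threshold
        if not anomalous:
            # Only normal readings update the baseline, so a fault cannot
            # "teach" the detector that faulty behavior is normal.
            self.history.append(value)
        return anomalous


detector = RollingAnomalyDetector()
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0]  # made-up speed readings
print([detector.update(r) for r in readings])
# [False, False, False, False, False, False, True]
```

Excluding flagged readings from the baseline keeps a malfunction from hiding itself, at the cost that a genuine, legitimate change in operating conditions will keep being flagged until a human reviews it. In a real deployment, a flag would typically trigger an alert or a safe mode rather than just a printed value.
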

What Are the Privacy Concerns With Nanorobot Data Collection?

Nanorobot data collection raises privacy concerns because sensitive information might be exposed or misused. To protect privacy, encrypt the data that nanorobots collect and transmit, and apply anonymization techniques so that individuals cannot be identified. These measures help keep personal details confidential, reduce the risk of data breaches or misuse, and foster trust in nanorobotics applications.
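
As a minimal illustration of the identification side, the Python sketch below de-identifies a hypothetical sensor record by dropping direct identifiers, replacing the patient ID with a keyed hash (HMAC-SHA256), and coarsening age into a range. Note that this is pseudonymization with generalization, which is weaker than full anonymization: whoever holds the key can still link records. The record schema and field names are assumptions for illustration. Encryption of data in transit and at rest would normally rely on a vetted library such as `cryptography` and is not shown here.

```python
import hashlib
import hmac
import secrets

# Assumption: the key is generated and held by the data controller,
# stored separately from the de-identified dataset.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize_id(patient_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex digest)."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, bucket: int = 10) -> str:
    """Coarsen an exact age into a range such as '40-49'."""
    low = (age // bucket) * bucket
    return f"{low}-{low + bucket - 1}"


def deidentify(record: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Return a copy of a hypothetical record with identifiers removed or replaced."""
    return {
        "subject": pseudonymize_id(record["patient_id"], key),
        "age_range": generalize_age(record["age"]),
        "reading": record["reading"],  # the measurement the analysis actually needs
        # 'name' and 'address' are deliberately not copied.
    }


raw = {"patient_id": "P-001", "name": "A. Person", "address": "1 Main St",
       "age": 47, "reading": 0.82}
print(deidentify(raw)["age_range"])  # 40-49
```

A keyed hash is used instead of a plain SHA-256 of the ID because short, structured identifiers can be recovered from an unkeyed hash by simply hashing every possible ID.
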

How Can Bias Be Minimized in Nanorobot Decision-Making?

Imagine steering a boat through fog: clear navigation depends on your instruments. To minimize bias in nanorobot decision-making, prioritize algorithmic fairness and bias mitigation. Audit algorithms for fairness regularly, diversify training data, and build in transparency measures. These steps help nanorobots make more equitable decisions. Like a skilled captain, you guide your nanorobots safely, steering clear of hidden biases that can lead to unfair or harmful outcomes.
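
A regular fairness audit can start with something as simple as comparing how often a system makes a positive decision for each group. The Python sketch below computes per-group selection rates and their largest gap, a metric known as demographic parity difference. It is only one of several fairness metrics, and which metric is appropriate depends on the application. The decision log, group labels, and the 0.1 tolerance are hypothetical illustration values.

```python
from collections import defaultdict
from typing import Sequence


def selection_rates(decisions: Sequence[int], groups: Sequence[str]) -> dict:
    """Share of positive decisions (1, e.g. 'treat') within each group."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must be the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def parity_audit(decisions: Sequence[int], groups: Sequence[str],
                 tolerance: float = 0.1) -> dict:
    """Demographic parity check: largest gap between group selection rates."""
    rates = selection_rates(decisions, groups)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passes": gap <= tolerance}


# Hypothetical audit log: 8 decisions across two patient groups.
decisions = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(parity_audit(decisions, groups))
# {'rates': {'A': 0.75, 'B': 0.25}, 'gap': 0.5, 'passes': False}
```

A failed audit does not by itself prove unfair treatment, since groups may differ in legitimate clinical need, but it tells reviewers where to look more closely.
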

What Are the Global Regulations for Responsible Nanorobot Deployment?

Follow the international standards and ethical frameworks that guide nanorobot deployment. These emphasize safety, transparency, and accountability to ensure responsible use. Governments and organizations work together to develop policies that prevent misuse and promote innovation. By adhering to these guidelines, you help ensure that nanorobots are deployed ethically and safely, in line with worldwide efforts to regulate emerging technologies responsibly.

How Is Transparency Maintained in Nanorobot AI Systems?

Think of a nanorobot AI system as a clear window into a complex world: you keep it clear by ensuring algorithmic accountability and engaging stakeholders at every step. Transparent algorithms reveal how decisions are made, like an open book. Regular audits and open communication keep the window clean and foster trust. Actively involving stakeholders turns the system into a shared journey rather than a mysterious maze, ensuring clarity and responsibility every step of the way.

Conclusion

As you advance in nanorobotics, let responsible AI be your guiding star. Like the fire Prometheus brought to humanity, this is a powerful gift that must be handled with care. It is your duty to harness it ethically, ensuring it benefits humanity without unintended harm. By prioritizing safety, transparency, and accountability, you can shape a future where innovation and morality walk hand in hand. Rather than igniting chaos through hubris, be a steward of progress and trust.